By Kavita Dehalwar

Collecting data for a binary logit model involves several key steps, each crucial to ensuring the accuracy and reliability of your analysis. Here’s a detailed guide on how to gather and prepare your data:

1. Define the Objective

Before collecting data, clearly define what you aim to analyze or predict. This definition will guide your decisions on what kind of data to collect and the variables to include. For a binary logit model, you need a binary outcome variable (e.g., pass/fail, yes/no, buy/not buy) and several predictor variables that you hypothesize might influence the outcome.

2. Identify Your Variables

- Dependent Variable: This should be a binary variable representing two mutually exclusive outcomes.

- Independent Variables: Choose factors that you believe might predict or influence the dependent variable. These could include demographic information, behavioral data, economic factors, etc.

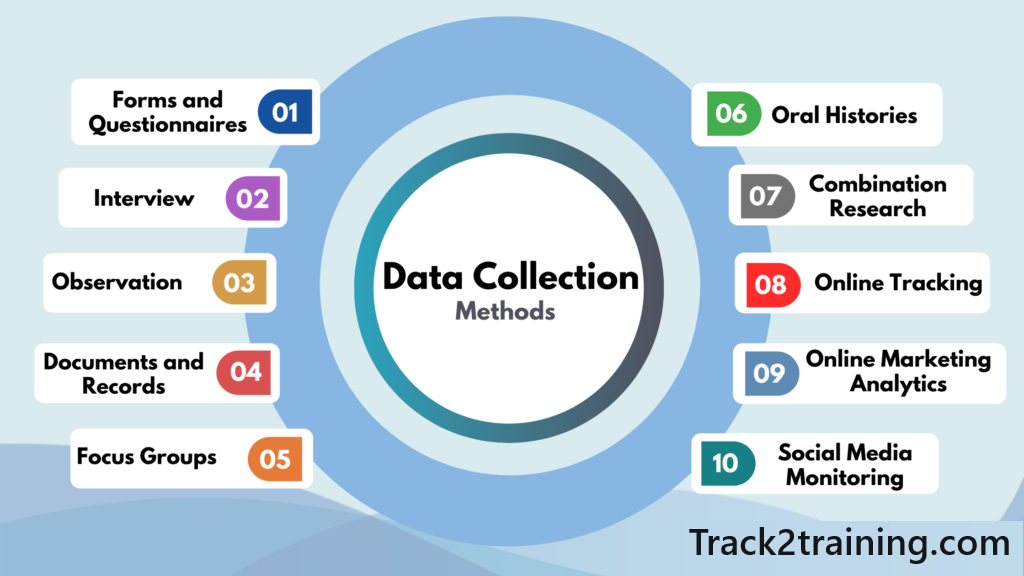

3. Data Collection Methods

There are several methods you can use to collect data:

- Surveys and Questionnaires: Useful for gathering qualitative and quantitative data directly from subjects.

- Experiments: Design an experiment to manipulate predictor variables under controlled conditions and observe the outcomes.

- Existing Databases: Use data from existing databases or datasets relevant to your research question.

- Observational Studies: Collect data from observing subjects in natural settings without interference.

- Administrative Records: Government or organizational records can be a rich source of data.

4. Sampling

Ensure that your sample is representative of the population you intend to study. This can involve:

- Random Sampling: Every member of the population has an equal chance of being included.

- Stratified Sampling: The population is divided into subgroups (strata), and random samples are drawn from each stratum.

- Cluster Sampling: Randomly selecting entire clusters of individuals, where a cluster forms naturally, like geographic areas or institutions.

5. Data Cleaning

Once collected, data often needs to be cleaned and prepared for analysis:

- Handling Missing Data: Decide how you’ll handle missing values (e.g., imputation, removal).

- Outlier Detection: Identify and treat outliers as they can skew analysis results.

- Variable Transformation: You may need to transform variables (e.g., log transformation, categorization) to fit the model requirements or to better capture the nonlinear relationships.

- Dummy Coding: Convert categorical independent variables into numerical form through dummy coding, especially if they are nominal without an inherent ordering.

6. Data Splitting

If you are also interested in validating the predictive power of your model, you should split your dataset:

- Training Set: Used to train the model.

- Test Set: Used to test the model, unseen during the training phase, to evaluate its performance and generalizability.

7. Ethical Considerations

Ensure ethical guidelines are followed, particularly with respect to participant privacy, informed consent, and data security, especially when handling sensitive information.

8. Data Integration

If data is collected from different sources or at different times, integrate it into a consistent format in a single database or spreadsheet. This unified format will simplify the analysis.

9. Preliminary Analysis

Before running the binary logit model, conduct a preliminary analysis to understand the data’s characteristics, including distributions, correlations among variables, and a preliminary check for potential multicollinearity, which might necessitate adjustments in the model.

By following these steps, you can collect robust data that will form a solid foundation for your binary logit model analysis, providing insights into the factors influencing your outcome of interest.

References

Cramer, J. S. (1999). Predictive performance of the binary logit model in unbalanced samples. Journal of the Royal Statistical Society: Series D (The Statistician), 48(1), 85-94.

Dehalwar, K., & Sharma, S. N. (2023). Fundamentals of Research Writing and Uses of Research Methodologies. Edupedia Publications Pvt Ltd.

Horowitz, J. L., & Savin, N. E. (2001). Binary response models: Logits, probits and semiparametrics. Journal of economic perspectives, 15(4), 43-56.

Singh, D., Das, P., & Ghosh, I. (2024). Driver behavior modeling at uncontrolled intersections under Indian traffic conditions. Innovative Infrastructure Solutions, 9(4), 1-11.

Tranmer, M., & Elliot, M. (2008). Binary logistic regression. Cathie Marsh for census and survey research, paper, 20.

Wilson, J. R., Lorenz, K. A., Wilson, J. R., & Lorenz, K. A. (2015). Standard binary logistic regression model. Modeling binary correlated responses using SAS, SPSS and R, 25-54.

Young, R. K., & Liesman, J. (2007). Estimating the relationship between measured wind speed and overturning truck crashes using a binary logit model. Accident Analysis & Prevention, 39(3), 574-580.

You must be logged in to post a comment.